A lot of customers have lagging slaves. It could be one of the top issues at support, due to the infamous row based replication without primary key issue :

| Bug #53375 | RBR + no PK => High load on slave (table scan/cpu) => slave failure | ||

|---|---|---|---|

If you use binlog_format = statement or mixed , there are several ways of monitoring the SQL thread. The most ancient is the log-slow-slave-statements variable. From 5.6.11, it is a dynamic variable, before that you had to restart the slave mysqld to enable it.

Once on, you can trace what’s going on in the SQL thread and analyze the slow query log. Of course, as the SQL thread could be running long write queries or a lot of fast queries, so it is crucial to set long_query_time = 0 for say 60 seconds to catch the fast writes. Fast does not mean that they can not be optimized :-).

From MySQL 5.6.14, there is even better. After this bug fix : Bug 16750433 – THE STATEMENT DIGEST DOES NOT SHOW THE SLAVE SQL THREAD STATEMENTS, the Performance_schema statement digests are showing the SQL thread statements as well !

So that ps_helper / mysql_sys can be used to see what’s going on in the SQL thread.

Now an real example :

If you see this kind of things : show global status like ‘Handler%’ or in the show engine innodb status :

|

1 2 3 |

Handler_read_next: 1,573,551,696 (551,543/sec) Number of rows inserted 172099, updated 685966, deleted 137734, read 1574302296 32.14 inserts/s, 208.76 updates/s, 28.84 deletes/s, 547765.56 reads/s |

500k index records scan per second is a bit too much for a (single) thread. No wonder that

Seconds_Behind_Master: 76162

is large and increasing.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

mysql> select * from statement_analysis ; +-------------------------------------------------------------------+-----------+-----------+------------+-----------+------------+---------------+-------------+-------------+--------------+-----------+---------------+---------------+-------------------+------------+-----------------+-------------+-------------------+----------------------------------+---------------------+---------------------+ | query | db | full_scan | exec_count | err_count | warn_count | total_latency | max_latency | avg_latency | lock_latency | rows_sent | rows_sent_avg | rows_examined | rows_examined_avg | tmp_tables | tmp_disk_tables | rows_sorted | sort_merge_passes | digest | first_seen | last_seen | +-------------------------------------------------------------------+-----------+-----------+------------+-----------+------------+---------------+-------------+-------------+--------------+-----------+---------------+---------------+-------------------+------------+-----------------+-------------+-------------------+----------------------------------+---------------------+---------------------+ | UPDATE t ... c1 = ? AND c2 = ? | prod | | 13904 | 0 | 4896 | 00:06:06.71 | 4.75 s | 26.37 ms | 1.26 s | 0 | 0 | 1492198 | 107 | 0 | 0 | 0 | 0 | a36a0c617b2f696cb4889ee015541b65 | 2014-03-05 08:02:25 | 2014-03-05 08:13:30 | | UPDATE t ... c1 = ? AND c2 = ? | prod | | 2024 | 0 | 0 | 00:01:34.49 | 2.57 s | 46.69 ms | 147.32 ms | 0 | 0 | 373785 | 185 | 0 | 0 | 0 | 0 | 8dd4ee22a216fe5fcc03b03a34f3a3fd | 2014-03-05 08:02:36 | 2014-03-05 08:13:30 | | UPDATE t ... c1 = ? AND c2 = ? | prod | | 1662 | 0 | 0 | 00:01:31.54 | 3.31 s | 55.08 ms | 65.96 ms | 0 | 0 | 317512 | 191 | 0 | 0 | 0 | 0 | 27a13609455481e156ca9eb2e5c54d6d | 2014-03-05 08:02:53 | 2014-03-05 08:13:29 | | UPDATE t ... ? , c3 = IF ( ... | prod | | 1987 | 0 | 1064 | 41.06 s | 1.11 s | 20.66 ms | 206.36 ms | 0 | 0 | 206869 | 104 | 0 | 0 | 0 | 0 | f812e322c0e3dfaf905fe1d624978564 | 2014-03-05 08:02:42 | 2014-03-05 08:13:29 | | UPDATE t ... c1 = ? AND c2 = ? | prod | | 680 | 0 | 0 | 39.11 s | 1.91 s | 57.51 ms | 62.61 ms | 0 | 0 | 144791 | 213 | 0 | 0 | 0 | 0 | a9266204238e66806ca4c073650b72e5 | 2014-03-05 08:05:37 | 2014-03-05 08:13:22 | mysql> select * from io_global_by_file_by_latency ; +-------------------------------------------------------------------------------------+------------+---------------+------------+--------------+-------------+---------------+------------+--------------+ | file | count_star | total_latency | count_read | read_latency | count_write | write_latency | count_misc | misc_latency | +-------------------------------------------------------------------------------------+------------+---------------+------------+--------------+-------------+---------------+------------+--------------+ | @@datadir/prod/t.ibd | 244505 | 00:05:58.94 | 244319 | 00:05:58.83 | 114 | 4.56 ms | 72 | 105.85 ms | |

The slave was up for 12 min and nearly 10 min was spent on the updates ! 18000 updates in 10 minutes, that’s fast 🙂 So do we need to buy better hardware ?

No. Average rows examined is between 100 – 200. Maybe we can optimize it ?

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

mysql> explain update t set c3 = 1 where c1=6577849127655212045 and c2 = '2014-03-04'\G *************************** 1. row *************************** id: 1 select_type: SIMPLE table: t type: index_merge possible_keys: idx_c1, idx_c2 key: idx_c1, idx_c2 key_len: 8,3 ref: NULL rows: 3 Extra: Using intersect(idx_c1,idx_c2); Using where 1 row in set (0.00 sec) |

Indeed, adding an index on (c1,c2) fixed the issue. And cut by 100 the rows examined average. After one hour, the slave that was lagging 1 day had caught up.

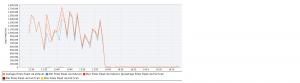

This is the MEM graph for the rows_examined.

Thank you to Hauns Froehlingsdorf who worked with me on this issue.

Things to note here :

- the performance_schema rocks for statement replication. I truly hope that row based replication will get the instrumentation it deserves. Maybe row events could appear as statements ? That’s how they are processed by the parser when you do point-in-time recovery for example …

- index merge used for updates is much more expensive that it seems : it is not certainly not 3 rows_examined, not 100-200 much more here. Index entries are locked for 2 index ranges, so more locks in memory in REPEATABLE READS isolation at least. Here are the 2 bugs for completeness :

- Bug 14226987 – ROWS_EXAMINED IS NOT CORRECT FOR INDEX MERGE QUERIES

- Bug 14226171 – EXCESSIVE ROW LOCKING WITH UPDATE IN 5.5.25